Is there a clean & easy way to set up a test for a Channels Worker process?

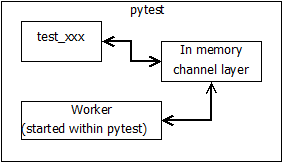

In the ideal world, I could set up a test and run the (async) worker processes in the same event loop that pytest uses, communicating among those workers using the InMemoryChannelLayer.

I’m trying to use pytest. That may or may not be the wrong choice here.

Side note 1: This is the first time I’ve ever tried writing real tests for a real system. This will become more apparent if I need to ask follow-up questions on any information supplied.

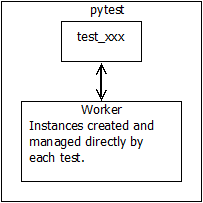

I’ve tried configuring a fixture to start the worker process, but (appropriately enough) it doesn’t return so the tests don’t proceed.
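For anyone reading along, here is a minimal sketch of the usual asyncio way around a coroutine that never returns: schedule it as a background task on the running loop rather than awaiting it, then cancel it at teardown. It uses only the standard library. `worker`, `run_test`, and the `asyncio.Queue` inbox are hypothetical stand-ins, not the Channels worker or a channel layer, so treat it as an illustration of the pattern rather than a working Channels fixture.

```python
import asyncio


async def worker(inbox: asyncio.Queue, results: list) -> None:
    """Hypothetical stand-in for a long-running worker: loops until cancelled."""
    while True:
        message = await inbox.get()
        results.append(message["text"].upper())
        inbox.task_done()


async def run_test() -> list:
    inbox: asyncio.Queue = asyncio.Queue()
    results: list = []
    # Schedule the worker on the current loop instead of awaiting it,
    # so control returns to the test body immediately.
    task = asyncio.create_task(worker(inbox, results))
    try:
        await inbox.put({"text": "hello"})
        await inbox.put({"text": "world"})
        # Wait until the worker has processed everything queued so far.
        await asyncio.wait_for(inbox.join(), timeout=1)
    finally:
        # Teardown: stop the never-ending worker and wait for it to exit.
        task.cancel()
        try:
            await task
        except asyncio.CancelledError:
            pass
    return results
```

Calling `asyncio.run(run_test())` drives the whole thing from synchronous code. With pytest-asyncio, the same start/cancel steps would presumably sit on either side of a `yield` in an async fixture, so the task is created while a loop is already running.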

I tried launching the test in a separate thread, but that throws an error about not having an event loop.
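My guess at the cause, offered as an assumption since I don't have the traceback: asyncio only sets up an event loop implicitly for the main thread, so code in any other thread that asks for the current loop fails unless that thread creates its own. A minimal standard-library sketch of giving a thread its own loop follows; `worker_once` and `run_in_thread` are hypothetical names for illustration.

```python
import asyncio
import threading


async def worker_once() -> str:
    """Hypothetical stand-in for a unit of async worker code."""
    await asyncio.sleep(0)
    return "done"


def run_in_thread() -> list:
    outcome: list = []

    def target() -> None:
        # asyncio.run() creates a fresh event loop for this thread,
        # runs the coroutine to completion, then closes the loop.
        outcome.append(asyncio.run(worker_once()))

    thread = threading.Thread(target=target)
    thread.start()
    thread.join(timeout=5)
    return outcome
```

The catch is that the thread's loop is a different loop from the one pytest uses, and asyncio objects are generally not safe to share between loops, so an in-memory layer would probably not bridge the two cleanly.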

I tried creating a custom version of the runworker command’s handle method so that I could pass the main thread’s event loop to it, but at the time the fixture runs, there is no event loop.

I’ve backed off this idea for the sake of making progress with the tests themselves.

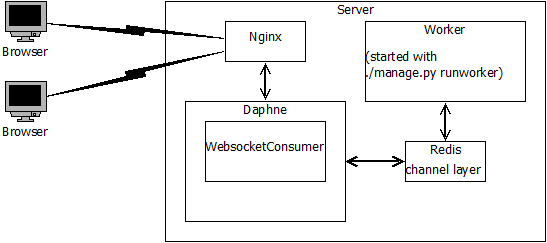

What I’m currently doing is running the tests against a separate runworker process through the Redis channel layer. It works, and it’s actually quite satisfactory for what I am really trying to accomplish with this.

(That also means that I don’t have the code handy to show what I’ve tried. I can pull a copy for anyone wanting a good laugh.)

Side note 2: I’m not sending messages to the workers directly. The workers are driven by an AsyncWebsocketConsumer. In the real application, the browser sends the message to the consumer. The consumer decides which worker will handle each message, and effectively forwards the message to the appropriate worker. So my test creates the instance of the WebsocketCommunicator and sends the messages to it, allowing it to forward the messages as appropriate.

In the ideal world, I would have tests that put messages directly in the channel layer for each worker, testing them independently of the consumer, but that’s a different issue.

All thoughts, ideas, comments, suggestions, recommendations, conjectures, wild guesses, etc. are appreciated. Given the current state of the project, this is as much an academic exercise as anything else. I can continue with the process I have now and not lose any functionality that I’m concerned about.